Google has recently launched a new subscription type BigQuery subscriptions using which PubSub can directly integrate with BigQuery. This was previously achieved by writing a dataflow as a middleware application.

Before we go into the details of how this is done. Let me give you a quick glance of what these services are.

Google PubSub

As the name suggests its a managed service provided by Google based on the publish subscribe design pattern. It is widely used in asynchronous communication between applications. Google internally uses this service in products like Gmail to send millions of messages per second.

BigQuery

It’s a serverless and highly scalable data warehouse service offered by Google. It can easily handle several petabytes of data, deriving analytics and business critical information in seconds.

Dataflow

Google cloud dataflow is a fully managed data processing service used for batch and realtime data streaming pipelines written using Apache Beam. Due to its design of processing data in parallel huge amount of data can be processed in within seconds.

In order to learn more about these services you can refer to the google documentation.

On the July 25, 2022 Google announced a new feature where PubSub can directly write messages to a BigQuery table using the BigQuery Subscriptions.

This comes handy in the following scenarios

- Writing data to BigQuery to generate metrics for analytics ( For example, sending usage data on an e-commerce website)

- Exporting logs to BigQuery asynchronously.

Following are the steps to accomplish the setup.

- Create a BigQuery table with/without fields representing the pubSub message metadata.

- Create a PubSub Schema identical to the schema of the BigQuery table created in the first step.

- Create a PubSub topic with the schema created in step 2.

- Update the pubSub service account.

- Create a pubSub Subscription.

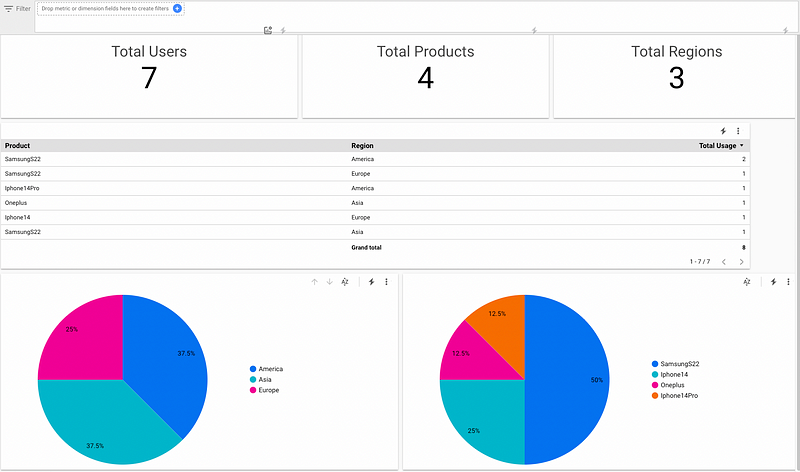

In order to understand this in depth let us consider an example. Imagine an e-commerce website wants to understand which product is popular amongst its users. For this use case it publishes an event to the topic whenever a user clicks on a particular product. It can then analyze the data in BigQuery or create a dashboard to understand the statistics of its products.

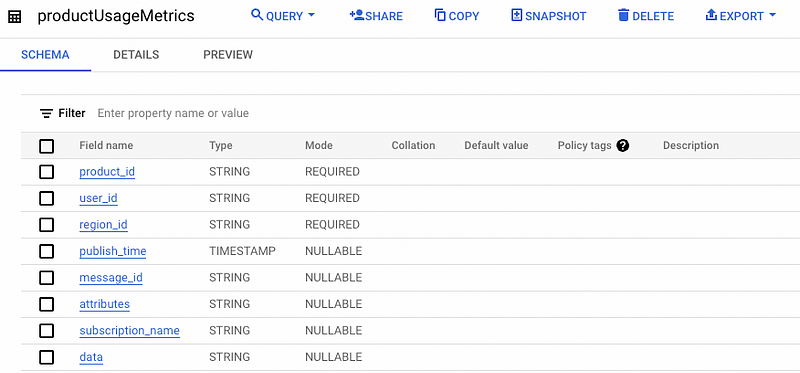

Step 1: Create a BigQuery table

Google leverages the PubSub schema definition and BigQuery Table definition to map the data between these two services. BigQuery tables need to be defined with each column having the same name and compatible datatype as to the PubSub schema fields.

In our example we create a table which has the following attributes.

- user_id: This is the customer that clicks the product page.

- product_id: This identifies the products on an e-commerce website

- region_id: The location from where the customer belongs.

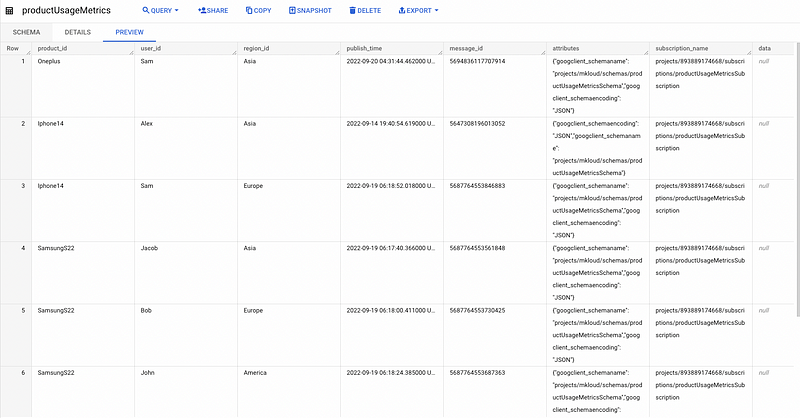

Along with the above three attributes we can also enable the message metadata to be saved in additional columns created in a BigQuery table.

In the snapshot we can see that we have columns other than the three mentioned above. These columns represent the metadata of the message. Capturing the metadata can have its own benefits. For instance PubSub might send duplicate messages due to its at least once delivery property, this will create duplicate records in BigQuery which can be identified using the message_id column.

Also do note that all the additional columns should have the NULLABLE mode. Additionally, when you enable the option to write metadata from the message to these columns you cannot have partial columns. You need to have all 5 columns in the BigQuery table.

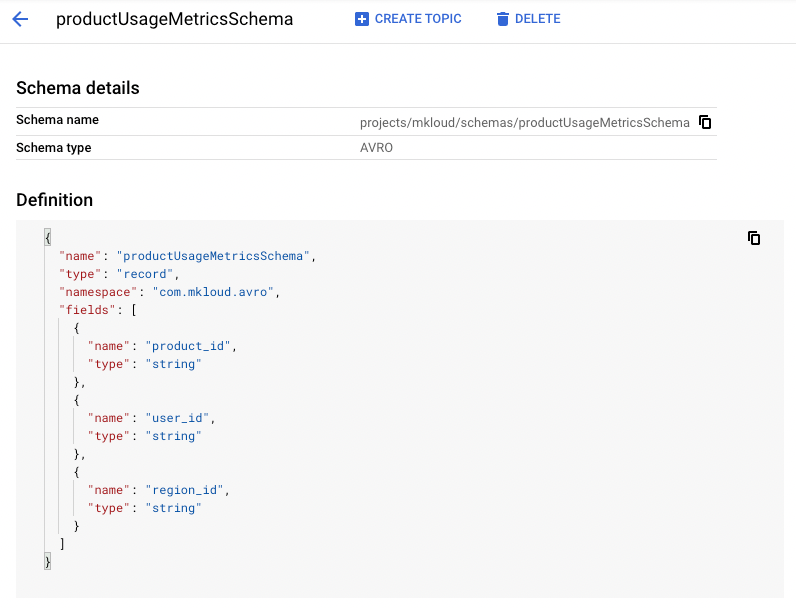

Step 2: Create PubSub Schema

PubSub schemas are defined in Apache Avro or Protocol Buffer format. The schema should not contain any fields representing the metadata of the PubSub message. The fields you want to integrate with BigQuery must have the same name and its datatype should be compatible.

In our example the schema is pretty simple with only 3 fields which we have defined for the BigQuery table. If there are additional fields present in the schema which are not present in the table, those fields can be dropped using the BigQuery subscription property Drop unknown fields.

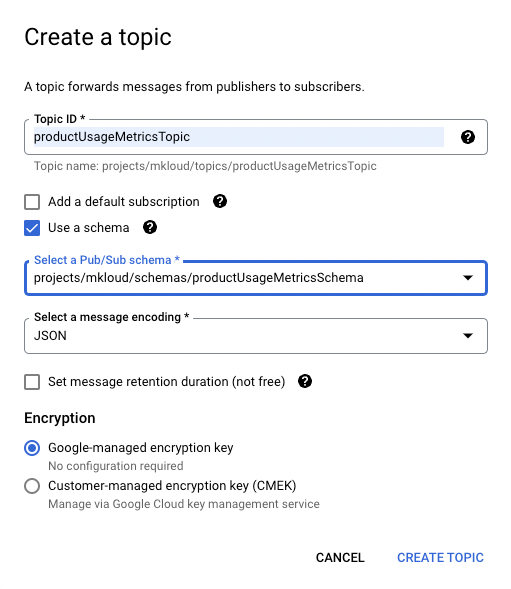

Step 3: Create PubSub Topic

In this step we create a topic with the option Use a schema. We select the schema we created in step 2.

Note: Do not check the Add a default subscription. We will be creating a subscription in step 5.

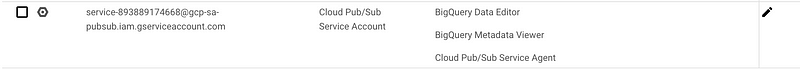

Step 4: Update PubSub service account

PubSub creates and manages a service account for each project. The PubSub service account would need access to write to the BigQuery table and to read the table metadata.

The following roles need to be added to the service account.

- BigQuery Data Editor (roles/bigquery.dataEditor)

- BigQuery Metadata viewer (roles/bigquery.metadataViewer)

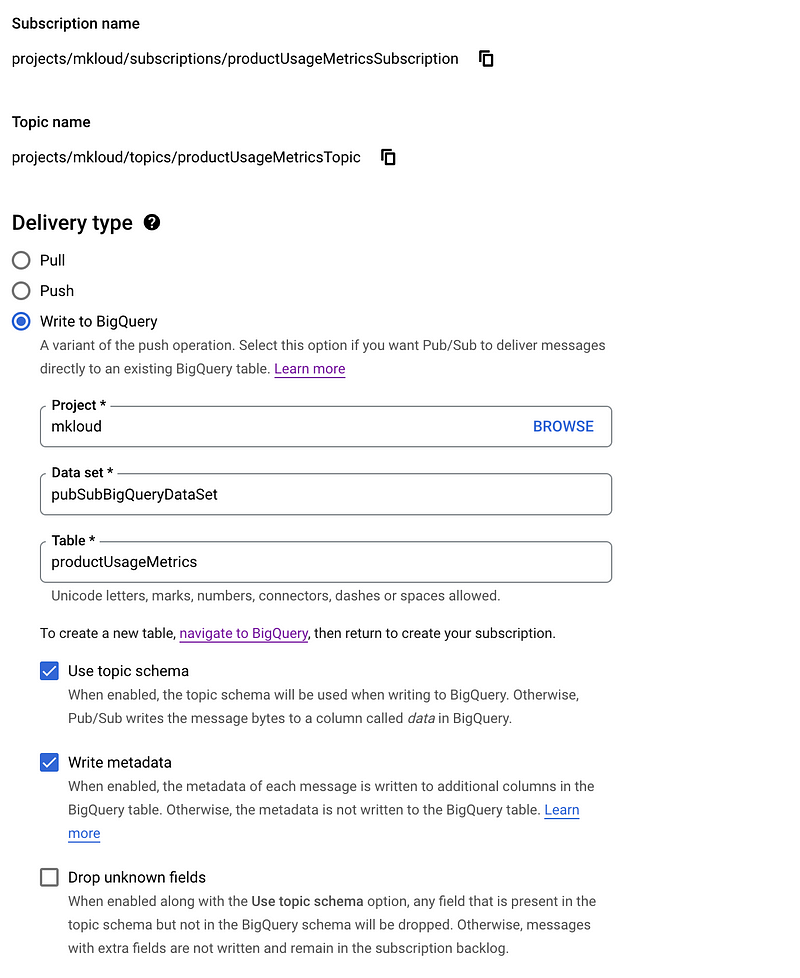

Step 5: Create PubSub subscription

In this last step we need to make sure to join all the dots. A PubSub subscription would only be successfully created if the BigQuery table and PubSub schema have been created accurately. Also if the service account does not have the write permissions, the subscription would throw an exception while creation.

There are three important properties we need to understand while creating the subscription.

- Use Topic Schema: When this option is enabled, PubSub maps the PubSub schema fields to the BigQuery columns. If this option is not enabled, PubSub writes the entire message to the BigQuery column data with datatype BYTES or STRING.

- Write Metadata: This option enables PubSub to write metadata to the additional fields we saw in step 1.

- Drop Unknown fields: This option is used with Use Topic Schema option. If enabled, lets PubSub drop any field that is present in the topic schema but not in the BigQuery table. Without this option, messages with extra fields are not written to the table and remain in the subscription backlog.

The above snapshot shows the important points that we need to set in case of BigQuery subscription. All the other settings can be set according to one’s choice.

Note: When a PubSub message cannot be written to BigQuery, it remains unacknowledged and can be handled by configuring a dead letter topic.

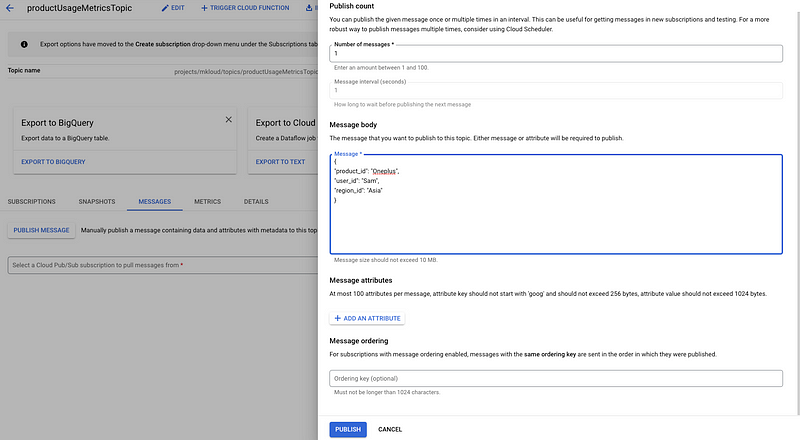

Now we have everything in place. So if you try to post a message on PubSub you should instantaneously see a new record being added to your BigQuery table.

This makes it super easy for applications to dump data in BigQuery for analytical purposes.

We can also use DataStudio to further create dashboards on top of this data for a holistic view.

I hope this helps to understand how this design can be leveraged. Do let me know the use-case where you think this would fit right. Additional you can find more information on this at https://cloud.google.com/pubsub/docs/bigquery